Homework 10¶

Write code to solve all problems. The grading rubric includes the following criteria:

- Correctness

- Readability

- Efficiency

Please do not copy answers found on the web or elsewhere as it will not benefit your learning. Searching the web for general references etc. is OK. Some discussion with friends is fine too - but again, do not just copy their answer.

Honor Code: By submitting this assignment, you certify that this is your original work.

Exercise 1 (25 points)

Use Simple Monte Carlo Integration to estimate the function

Python code to do this is provided.

Write parallel code to speed up this calculation using

ProcessPoolExecutor with concurrent.futures or

multiprocessing and as many cores as are available. Calculate the

speed-up relative to the single processor version.

In [1]:

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

In [2]:

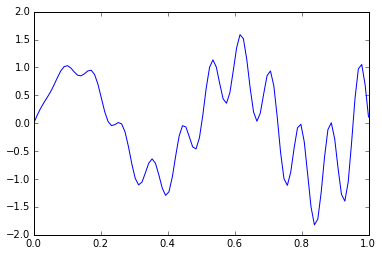

def f(x):

return x * np.cos(71*x) + np.sin(13*x)

In [3]:

x = np.linspace(0, 1, 100)

plt.plot(x, f(x))

pass

In [4]:

n = 10000000

x = np.random.random(n)

In [5]:

%%time

y = 1.0/n * np.sum(f(x))

print(y)

0.0205676875889

CPU times: user 1.34 s, sys: 290 ms, total: 1.63 s

Wall time: 1.64 s

In [6]:

import multiprocessing

workers = multiprocessing.cpu_count()

In [7]:

%%time

def g(x):

return np.sum(f(x))

parts = np.array_split(x, workers)

with multiprocessing.Pool() as pool:

y = 1.0/n * np.sum(pool.map(g, parts))

print(y)

0.0205676875889

CPU times: user 120 ms, sys: 160 ms, total: 280 ms

Wall time: 2.85 s

Exercise 2 (25 points)

Write a numba GUFunc to calculate \(Ax + b\), where \(A\) is

a \(m \times n\) matrix, \(x\) is a \(n \times 1\) vector,

and \(b\) is a \(m \times 1\) vector. Show that it works by

applying to the followng data sets. The operation done without using

GUFuncs is given.

In [8]:

m = 5

n = 4

k = 10

A = np.random.random((k,m,n))

x = np.random.random((k,n))

b = np.random.random((k,m))

In [9]:

res = np.zeros_like(b)

for i in range(k):

res[i] = A[i].dot(x[i]) + b[i]

In [10]:

res

Out[10]:

array([[ 1.35419644, 0.64082164, 0.91750864, 0.96469523, 1.35548165],

[ 1.83347738, 0.63323197, 1.34409738, 0.96622198, 1.38200563],

[ 1.12250907, 0.8616243 , 0.516737 , 0.73666376, 0.85680944],

[ 2.5532951 , 2.27305051, 1.67074558, 1.68382049, 2.68593328],

[ 1.01694983, 1.82940212, 1.04683991, 1.42589665, 2.2641885 ],

[ 1.11719829, 1.67843376, 1.24295512, 1.99321139, 1.29619135],

[ 0.76720058, 0.43195417, 0.77886426, 0.69709986, 1.40156771],

[ 1.12783795, 2.11092326, 1.0240491 , 1.74435509, 1.91984393],

[ 1.47348659, 1.09647053, 1.12408844, 2.07685308, 1.36413003],

[ 0.89961663, 0.66946045, 0.22872243, 1.25361187, 0.4674472 ]])

In [11]:

from numba import guvectorize, float64

In [12]:

@guvectorize([(float64[:, :], float64[:], float64[:], float64[:])],

'(m,n), (n), (m)->(m)')

def g(A, x, b, res):

m, n = A.shape

res += A.dot(x) + b

In [13]:

res = np.zeros_like(b)

g(A, x, b, res)

Out[13]:

array([[ 1.35419644, 0.64082164, 0.91750864, 0.96469523, 1.35548165],

[ 1.83347738, 0.63323197, 1.34409738, 0.96622198, 1.38200563],

[ 1.12250907, 0.8616243 , 0.516737 , 0.73666376, 0.85680944],

[ 2.5532951 , 2.27305051, 1.67074558, 1.68382049, 2.68593328],

[ 1.01694983, 1.82940212, 1.04683991, 1.42589665, 2.2641885 ],

[ 1.11719829, 1.67843376, 1.24295512, 1.99321139, 1.29619135],

[ 0.76720058, 0.43195417, 0.77886426, 0.69709986, 1.40156771],

[ 1.12783795, 2.11092326, 1.0240491 , 1.74435509, 1.91984393],

[ 1.47348659, 1.09647053, 1.12408844, 2.07685308, 1.36413003],

[ 0.89961663, 0.66946045, 0.22872243, 1.25361187, 0.4674472 ]])

Exercise 3 (50 points)

Wrtie a pyspark program to find the top 10 words in the English

Wikipedia dump in the data folder, using only articles from the

directories that begin with C. Words should be converted to

lowercase, stripped of all punctuation, and exclude strings consisting

of all numbers. Exclude the following stop words:

a,able,about,across,after,all,almost,also,am,among,an,and,any,are,as,at,be,because,been,but,by,can,cannot,could,dear,did,do,does,either,else,ever,every,for,from,get,got,had,has,have,he,her,hers,him,his,how,however,i,if,in,into,is,it,its,just,least,let,like,likely,may,me,might,most,must,my,neither,no,nor,not,of,off,often,on,only,or,other,our,own,rather,said,say,says,she,should,since,so,some,than,that,the,their,them,then,there,these,they,this,tis,to,too,twas,us,wants,was,we,were,what,when,where,which,while,who,whom,why,will,with,would,yet,you,your

In [14]:

stop = set('a,able,about,across,after,all,almost,also,am,among,an,and,any,are,as,at,be,because,been,but,by,can,cannot,could,dear,did,do,does,either,else,ever,every,for,from,get,got,had,has,have,he,her,hers,him,his,how,however,i,if,in,into,is,it,its,just,least,let,like,likely,may,me,might,most,must,my,neither,no,nor,not,of,off,often,on,only,or,other,our,own,rather,said,say,says,she,should,since,so,some,than,that,the,their,them,then,there,these,they,this,tis,to,too,twas,us,wants,was,we,were,what,when,where,which,while,who,whom,why,will,with,would,yet,you,your'.split(','))

In [25]:

from pyspark import SparkContext

sc = SparkContext(master = 'local[*]')

In [30]:

text = sc.textFile('data/C*/wiki*')

In [31]:

import string

def tokenize(line):

table = dict.fromkeys(map(ord, string.punctuation))

return line.translate(table).lower().strip().split()

In [32]:

freqs = (text.flatMap(lambda line: tokenize(line))

.filter(lambda word: word not in stop)

.filter(lambda word: not word.isnumeric())

.map(lambda word: (word, 1))

.reduceByKey(lambda x, y: x+y))

In [33]:

freqs.cache()

Out[33]:

PythonRDD[13] at RDD at PythonRDD.scala:43

In [34]:

freqs.takeOrdered(10, key=lambda x: -x[1])

Out[34]:

[('doc', 124923),

('first', 36136),

('one', 32562),

('new', 31637),

('two', 24565),

('school', 22103),

('during', 19674),

('time', 19179),

('more', 18006),

('years', 16832)]

In [22]:

sc.stop()