Sparse Matrices¶

In [1]:

%matplotlib inline

import numpy as np

import pandas as pd

from scipy import sparse

import scipy.sparse.linalg as spla

import matplotlib.pyplot as plt

import seaborn as sns

In [2]:

sns.set_context('notebook', font_scale=1.5)

Creating a sparse matrix¶

There are many applications in which we deal with matrices that are mostly zeros. For example, a matrix representing social networks is very sparse - there are 7 billion people, but most people are only connected to a few hundred or thousand others directly. Storing such a social network as a sparse rather than dense matrix will offer orders of magnitude reductions in memory requirements and corresponding speed-ups in computation.

Coordinate format¶

The simplest sparse matrix format is built from the coordinates and values of the non-zero entries.

From dense matrix¶

In [3]:

A = np.random.poisson(0.2, (5,15)) * np.random.randint(0, 10, (5, 15))

A

Out[3]:

array([[ 0, 0, 0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 4, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 0],

[ 0, 0, 0, 9, 0, 0, 0, 0, 1, 0, 0, 0, 4, 0, 0],

[ 0, 0, 0, 4, 0, 0, 0, 0, 0, 10, 0, 0, 3, 1, 0],

[ 0, 0, 0, 0, 0, 3, 0, 0, 0, 0, 0, 0, 0, 0, 6]])

In [4]:

rows, cols = np.nonzero(A)

vals = A[rows, cols]

In [5]:

vals

Out[5]:

array([ 5, 4, 5, 9, 1, 4, 4, 10, 3, 1, 3, 6])

In [6]:

rows

Out[6]:

array([0, 0, 1, 2, 2, 2, 3, 3, 3, 3, 4, 4])

In [7]:

cols

Out[7]:

array([ 7, 13, 8, 3, 8, 12, 3, 9, 12, 13, 5, 14])

In [8]:

X1 = sparse.coo_matrix(A)

X1

Out[8]:

<5x15 sparse matrix of type '<class 'numpy.int64'>'

with 12 stored elements in COOrdinate format>

In [9]:

print(X1)

(0, 7) 5

(0, 13) 4

(1, 8) 5

(2, 3) 9

(2, 8) 1

(2, 12) 4

(3, 3) 4

(3, 9) 10

(3, 12) 3

(3, 13) 1

(4, 5) 3

(4, 14) 6

From coordinates¶

Note that the (values, (rows, cols)) argument is a single tuple.

In [10]:

X2 = sparse.coo_matrix((vals, (rows, cols)))

X2

Out[10]:

<5x15 sparse matrix of type '<class 'numpy.int64'>'

with 12 stored elements in COOrdinate format>

In [11]:

print(X2)

(0, 7) 5

(0, 13) 4

(1, 8) 5

(2, 3) 9

(2, 8) 1

(2, 12) 4

(3, 3) 4

(3, 9) 10

(3, 12) 3

(3, 13) 1

(4, 5) 3

(4, 14) 6

Convert back to dense matrix¶

In [12]:

X2.todense()

Out[12]:

matrix([[ 0, 0, 0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 4, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 0],

[ 0, 0, 0, 9, 0, 0, 0, 0, 1, 0, 0, 0, 4, 0, 0],

[ 0, 0, 0, 4, 0, 0, 0, 0, 0, 10, 0, 0, 3, 1, 0],

[ 0, 0, 0, 0, 0, 3, 0, 0, 0, 0, 0, 0, 0, 0, 6]])

Compressed Sparse Row and Column formats¶

When we have repeated entries in the rows or cols, we can remove the redundancy by indicating the location of the first occurrence of a value and its increment instead of the full coordinates. Note that the final index location must be the number of rows or cols since there is no other way to know the shape. These are known as CSR or CSC formats.

In [13]:

np.vstack([rows, cols])

Out[13]:

array([[ 0, 0, 1, 2, 2, 2, 3, 3, 3, 3, 4, 4],

[ 7, 13, 8, 3, 8, 12, 3, 9, 12, 13, 5, 14]])

In [14]:

indptr = np.r_[np.searchsorted(rows, np.unique(rows)), len(rows)]

indptr

Out[14]:

array([ 0, 2, 3, 6, 10, 12])

In [15]:

X3 = sparse.csr_matrix((vals, cols, indptr))

X3

Out[15]:

<5x15 sparse matrix of type '<class 'numpy.int64'>'

with 12 stored elements in Compressed Sparse Row format>

In [16]:

print(X3)

(0, 7) 5

(0, 13) 4

(1, 8) 5

(2, 3) 9

(2, 8) 1

(2, 12) 4

(3, 3) 4

(3, 9) 10

(3, 12) 3

(3, 13) 1

(4, 5) 3

(4, 14) 6

In [17]:

X3.todense()

Out[17]:

matrix([[ 0, 0, 0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 4, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 0],

[ 0, 0, 0, 9, 0, 0, 0, 0, 1, 0, 0, 0, 4, 0, 0],

[ 0, 0, 0, 4, 0, 0, 0, 0, 0, 10, 0, 0, 3, 1, 0],

[ 0, 0, 0, 0, 0, 3, 0, 0, 0, 0, 0, 0, 0, 0, 6]])

Because the coordinate format is more intuitive, it is often more convenient to first create a COO matrix then cast to CSR or CSC form.

In [18]:

X4 = X2.tocsr()

In [19]:

X4

Out[19]:

<5x15 sparse matrix of type '<class 'numpy.int64'>'

with 12 stored elements in Compressed Sparse Row format>

COO summation convention¶

When entries are repeated in a sparse matrix, they are summed. This provides a quick way to construct confusion matrices for evaluation of multi-class classification algorithms.

In [20]:

rows = np.repeat([0,1], 4)

cols = np.repeat([0,1], 4)

vals = np.arange(8)

In [21]:

rows

Out[21]:

array([0, 0, 0, 0, 1, 1, 1, 1])

In [22]:

cols

Out[22]:

array([0, 0, 0, 0, 1, 1, 1, 1])

In [23]:

vals

Out[23]:

array([0, 1, 2, 3, 4, 5, 6, 7])

In [24]:

X5 = sparse.coo_matrix((vals, (rows, cols)))

In [25]:

X5.todense()

Out[25]:

matrix([[ 6, 0],

[ 0, 22]])

In [26]:

obs = np.random.randint(0, 2, 100)

pred = np.random.randint(0, 2, 100)

vals = np.ones(100).astype('int')

In [27]:

pred

Out[27]:

array([0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0,

0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0,

1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1,

1, 0, 1, 1, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0,

1, 0, 1, 0, 1, 1, 1, 0, 0, 0, 0, 0])

In [28]:

vals.shape, obs.shape , pred.shape

Out[28]:

((100,), (100,), (100,))

In [29]:

X6 = sparse.coo_matrix((vals, (pred, obs)))

In [30]:

X6.todense()

Out[30]:

matrix([[31, 22],

[29, 18]])

For classifications with a large number of classes (e.g. image segmentation), the savings are even more dramatic.

In [31]:

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

In [32]:

iris = datasets.load_iris()

In [33]:

knn = KNeighborsClassifier()

X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target,

test_size=0.5, random_state=42)

In [34]:

pred = knn.fit(X_train, y_train).predict(X_test)

In [35]:

pred

Out[35]:

array([1, 0, 2, 1, 1, 0, 1, 2, 1, 1, 2, 0, 0, 0, 0, 1, 2, 1, 1, 2, 0, 1,

0, 2, 2, 2, 2, 2, 0, 0, 0, 0, 1, 0, 0, 2, 1, 0, 0, 0, 2, 1, 1, 0,

0, 1, 1, 2, 1, 2, 1, 2, 1, 0, 2, 1, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0,

1, 2, 0, 1, 2, 0, 1, 2, 1])

In [36]:

y_test

Out[36]:

array([1, 0, 2, 1, 1, 0, 1, 2, 1, 1, 2, 0, 0, 0, 0, 1, 2, 1, 1, 2, 0, 2,

0, 2, 2, 2, 2, 2, 0, 0, 0, 0, 1, 0, 0, 2, 1, 0, 0, 0, 2, 1, 1, 0,

0, 1, 2, 2, 1, 2, 1, 2, 1, 0, 2, 1, 0, 0, 0, 1, 2, 0, 0, 0, 1, 0,

1, 2, 0, 1, 2, 0, 2, 2, 1])

In [37]:

X7 = sparse.coo_matrix((np.ones(len(pred)).astype('int'), (pred, y_test)))

pd.DataFrame(X7.todense(), index=iris.target_names, columns=iris.target_names)

Out[37]:

| setosa | versicolor | virginica | |

|---|---|---|---|

| setosa | 29 | 0 | 0 |

| versicolor | 0 | 23 | 4 |

| virginica | 0 | 0 | 19 |

In [38]:

X7.todense()

Out[38]:

matrix([[29, 0, 0],

[ 0, 23, 4],

[ 0, 0, 19]])

Solving large sparse linear systems¶

SciPy provides efficient routines for solving large sparse systems as for dense matrices. We will illustrate by calculating the page rank for airports using data from the Bureau of Transportation Statisitcs. The PageRank algorithm is used to rank web pages for search results, but it can be used to rank any node in a directed graph (here we have airports instead of web pages). PageRank is fundamentally about finding the steady state in a Markov chain and can be solved as a linear system.

The update at each time step for the page rank \(PR\) of a page \(p_i\) is

i0¶

In the above equation, \(B_u\) is the set of all nodes \(v\) that link to \(u\), where each \(v\) node contributes its page rank divided by its number of outgoing links \(L(v)\). So a node \(v\) with a high page rank contributes a large value to a linked node \(u\) if \(v\) has relatively few other links.

i0¶

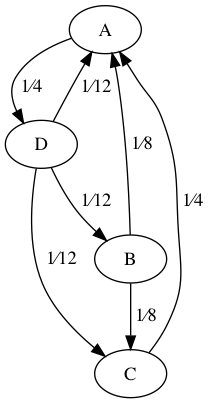

The figure shows a network with four nodes, all of which start with a page rank of \(1/4\). The values on the edges shows how much of its page rank one nodes contributes to its linked nodes in the first step.

By letting the sum of all page ranks to be equal to one, we essentially have a probability distribution over the nodes of the graph. Since the state of the graph only depends on its previous state, we have a Markov chain. If we assume that every node can be reached from every other node, the system will have a steady state - which is what the PageRank algorithm seeks to find. To guard against case where a node has out-degree 0, we allow every node a small random chance of transitioning to any other node using a damping factor \(d\). Then we solve the linear system to find the pagerank score \(R\).

i1¶

In matrix notation, this is

i2¶

where

i2.5¶

At steady state,

i3¶

and we can rearrange terms to solve for \(R\)

i4¶

In [39]:

data = pd.read_csv('data/airports.csv', usecols=[0,1])

In [40]:

data.shape

Out[40]:

(445827, 2)

In [41]:

data.head()

Out[41]:

| ORIGIN_AIRPORT_ID | DEST_AIRPORT_ID | |

|---|---|---|

| 0 | 10135 | 10397 |

| 1 | 10135 | 10397 |

| 2 | 10135 | 10397 |

| 3 | 10135 | 10397 |

| 4 | 10135 | 10397 |

In [42]:

lookup = pd.read_csv('data/names.csv', index_col=0)

In [43]:

lookup.shape

Out[43]:

(6404, 1)

In [44]:

lookup.head()

Out[44]:

| Description | |

|---|---|

| Code | |

| 10001 | Afognak Lake, AK: Afognak Lake Airport |

| 10003 | Granite Mountain, AK: Bear Creek Mining Strip |

| 10004 | Lik, AK: Lik Mining Camp |

| 10005 | Little Squaw, AK: Little Squaw Airport |

| 10006 | Kizhuyak, AK: Kizhuyak Bay |

In [45]:

import networkx as nx

In [46]:

g = nx.from_pandas_edgelist(data, source='ORIGIN_AIRPORT_ID', target='DEST_AIRPORT_ID')

In [47]:

airports = np.array(g.nodes())

adj_matrix = nx.to_scipy_sparse_matrix(g)

In [48]:

out_degrees = np.ravel(adj_matrix.sum(axis=1))

diag_matrix = sparse.diags(1 / out_degrees).tocsr()

M = (diag_matrix @ adj_matrix).T

In [49]:

n = len(airports)

d = 0.85

I = sparse.eye(n, format='csc')

A = I - d * M

b = (1-d) / n * np.ones(n) # so the sum of all page ranks is 1

In [50]:

A.todense()

Out[50]:

matrix([[ 1. , -0.00537975, -0.0085 , ..., 0. ,

0. , 0. ],

[-0.28333333, 1. , -0.0085 , ..., 0. ,

0. , 0. ],

[-0.28333333, -0.00537975, 1. , ..., 0. ,

0. , 0. ],

...,

[ 0. , 0. , 0. , ..., 1. ,

0. , 0. ],

[ 0. , 0. , 0. , ..., 0. ,

1. , 0. ],

[ 0. , 0. , 0. , ..., 0. ,

0. , 1. ]])

In [51]:

from scipy.sparse.linalg import spsolve

In [52]:

r = spsolve(A, b)

r.sum()

Out[52]:

0.9999999999999998

In [53]:

idx = np.argsort(r)

In [54]:

top10 = idx[-10:][::-1]

bot10 = idx[:10]

In [55]:

df = lookup.loc[airports[top10]]

df['degree'] = out_degrees[top10]

df['pagerank']= r[top10]

df

Out[55]:

| Description | degree | pagerank | |

|---|---|---|---|

| Code | |||

| 10397 | Atlanta, GA: Hartsfield-Jackson Atlanta Intern... | 158 | 0.043286 |

| 13930 | Chicago, IL: Chicago O'Hare International | 139 | 0.033956 |

| 11292 | Denver, CO: Denver International | 129 | 0.031434 |

| 11298 | Dallas/Fort Worth, TX: Dallas/Fort Worth Inter... | 108 | 0.027596 |

| 13487 | Minneapolis, MN: Minneapolis-St Paul Internati... | 108 | 0.027511 |

| 12266 | Houston, TX: George Bush Intercontinental/Houston | 110 | 0.025967 |

| 11433 | Detroit, MI: Detroit Metro Wayne County | 100 | 0.024738 |

| 14869 | Salt Lake City, UT: Salt Lake City International | 78 | 0.019298 |

| 14771 | San Francisco, CA: San Francisco International | 76 | 0.017820 |

| 14107 | Phoenix, AZ: Phoenix Sky Harbor International | 79 | 0.017000 |

In [56]:

df = lookup.loc[airports[bot10]]

df['degree'] = out_degrees[bot10]

df['pagerank']= r[bot10]

df

Out[56]:

| Description | degree | pagerank | |

|---|---|---|---|

| Code | |||

| 12265 | Niagara Falls, NY: Niagara Falls International | 1 | 0.000693 |

| 14025 | Plattsburgh, NY: Plattsburgh International | 1 | 0.000693 |

| 16218 | Yuma, AZ: Yuma MCAS/Yuma International | 1 | 0.000693 |

| 11695 | Flagstaff, AZ: Flagstaff Pulliam | 1 | 0.000693 |

| 10157 | Arcata/Eureka, CA: Arcata | 1 | 0.000710 |

| 14905 | Santa Maria, CA: Santa Maria Public/Capt. G. A... | 1 | 0.000710 |

| 14487 | Redding, CA: Redding Municipal | 1 | 0.000710 |

| 13964 | North Bend/Coos Bay, OR: Southwest Oregon Regi... | 1 | 0.000710 |

| 11049 | College Station/Bryan, TX: Easterwood Field | 1 | 0.000711 |

| 12177 | Hobbs, NM: Lea County Regional | 1 | 0.000711 |

In [57]:

labels = {airports[i]: lookup.loc[airports[i]].str.split(':').str[0].values[0]

for i in np.r_[top10[:5], bot10[:5]]}

nx.draw(g, pos=nx.spring_layout(g), labels=labels,

node_color='blue', font_color='red', alpha=0.5,

node_size=np.clip(5000*r, 1, 5000*r), width=0.1)

In [ ]: