Scalars¶

[1]:

%matplotlib inline

[2]:

import numpy as np

import matplotlib.pyplot as plt

Integers¶

Bit shifting¶

[4]:

format(16 >> 2, '032b')

[4]:

'00000000000000000000000000000100'

[5]:

16 >> 2

[5]:

4

[6]:

format(16 << 2, '032b')

[6]:

'00000000000000000000000001000000'

[7]:

16 << 2

[7]:

64

Endianess¶

This refers to how the bytes that make up an integer are stored in the computer. Big Endian means that the most significant byte (8 bits) is stored at the lowest address, while Little Endian means that the least significant byte is stored at the lowest address.

Source: https://chortle.ccsu.edu/AssemblyTutorial/Chapter-15/bigLittleEndian.gif

Overflow¶

In general, the computer representation of integers has a limited range, and may overflow. The range depends on whether the integer is signed or unsigned.

For example, with 8 bits, we can represent at most \(2^8 = 256\) integers.

0 to 255 unsigned

-128 ti 127 signed

Signed integers

[8]:

np.arange(130, dtype=np.int8)[-5:]

[8]:

array([ 125, 126, 127, -128, -127], dtype=int8)

Unsigned integers

[9]:

np.arange(130, dtype=np.uint8)[-5:]

[9]:

array([125, 126, 127, 128, 129], dtype=uint8)

[10]:

np.arange(260, dtype=np.uint8)[-5:]

[10]:

array([255, 0, 1, 2, 3], dtype=uint8)

Integer division¶

In Python 2 or other languages such as C/C++, be very careful when dividing as the division operator / performs integer division when both numerator and denominator are integers. This is rarely what you want. In Python 3 the / always performs floating point division, and you use // for integer division, removing a common source of bugs in numerical calculations.

[11]:

%%python2

import numpy as np

x = np.arange(10)

print(x/10)

Traceback (most recent call last):

File "<stdin>", line 2, in <module>

ImportError: No module named numpy

Python 3 does the “right” thing.

[12]:

x = np.arange(10)

x/10

[12]:

array([0. , 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

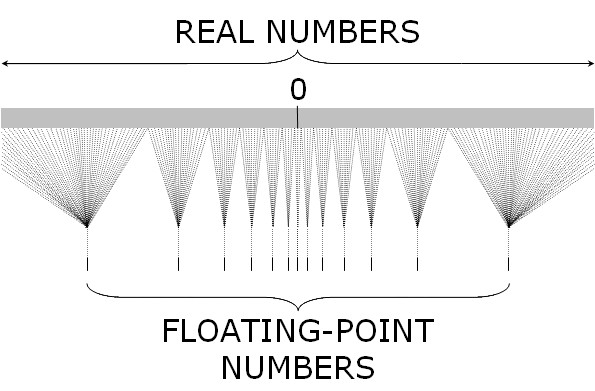

Real numbers¶

Real numbers are represented as floating point numbers. A floating point number is stored in 3 pieces (sign bit, exponent, mantissa) so that every float is represetned as get +/- mantissa ^ exponent. Because of this, the interval between consecutive numbers is smallest (high precison) for numebrs close to 0 and largest for numbers close to the lower and upper bounds.

Because exponents have to be singed to represent both small and large numbers, but it is more convenint to use unsigned numbers here, the exponnent has an offset (also knwnn as the exponentn bias). For example, if the expoennt is an unsigned 8-bit number, it can rerpesent the range (0, 255). By using an offset of 128, it will now represent the range (-127, 128).

Note: Intervals between consecutive floating point numbers are not constant. In particular, the precision for small numbers is much larger than for large numbers. In fact, approximately half of all floating point numbers lie between -1 and 1 when using the double type in C/C++ (also the default for numpy).

Because of this, if you are adding many numbers, it is more accurate to first add the small numbers before the large numbers.

See Wikipedia for how this binary number is evaluated to 0.15625.

[13]:

from ctypes import c_int, c_float

[14]:

s = c_int.from_buffer(c_float(0.15625)).value

[15]:

s = format(s, '032b')

s

[15]:

'00111110001000000000000000000000'

[16]:

rep = {

'sign': s[:1],

'exponent' : s[1:9:],

'fraction' : s[9:]

}

rep

[16]:

{'exponent': '01111100', 'fraction': '01000000000000000000000', 'sign': '0'}

Most base 10 real numbers are approximations¶

This is simply because numbers are stored in finite-precision binary format.

[17]:

'%.20f' % (0.1 * 0.1 * 100)

[17]:

'1.00000000000000022204'

Never check for equality of floating point numbers¶

[18]:

i = 0

loops = 0

while i != 1:

i += 0.1 * 0.1

loops += 1

if loops == 1000000:

break

i

[18]:

10000.000000171856

[19]:

i = 0

loops = 0

while np.abs(1 - i) > 1e-6:

i += 0.1 * 0.1

loops += 1

if loops == 1000000:

break

i

[19]:

1.0000000000000007

Associative law does not necessarily hold¶

[20]:

6.022e23 - 6.022e23 + 1

[20]:

1.0

[21]:

1 + 6.022e23 - 6.022e23

[21]:

0.0

Distributive law does not hold¶

[22]:

a = np.exp(1)

b = np.pi

c = np.sin(1)

[23]:

a*(b+c)

[23]:

10.82708950985241

[24]:

a*b + a*c

[24]:

10.827089509852408

Catastrophic cancellation¶

Consider calculating sample variance

Be careful whenever you calculate the difference of potentially big numbers.

[25]:

def var(x):

"""Returns variance of sample data using sum of squares formula."""

n = len(x)

return (1.0/(n*(n-1))*(n*np.sum(x**2) - (np.sum(x))**2))

Numerically stable algorithms¶

What is the sample variance for numbers from a normal distribution with variance 1?¶

[26]:

np.random.seed(15)

x_ = np.random.normal(0, 1, int(1e6))

x = 1e12 + x_

var(x)

[26]:

-1328166901.4739892

[27]:

np.var(x)

[27]:

1.001345504504934

Underflow¶

[28]:

np.warnings.filterwarnings('ignore')

[29]:

np.random.seed(4)

xs = np.random.random(1000)

ys = np.random.random(1000)

np.prod(xs)/np.prod(ys)

[29]:

nan

Prevent underflow by staying in log space¶

[30]:

x = np.sum(np.log(xs))

y = np.sum(np.log(ys))

np.exp(x - y)

[30]:

696432868222.2549

Overflow¶

Let’s calculate

Using basic algebra, we get the solution \(\log(2) + 1000\).

[31]:

x = np.array([1000, 1000])

np.log(np.sum(np.exp(x)))

[31]:

inf

[32]:

np.logaddexp(*x)

[32]:

1000.6931471805599

logsumexp

This function generalizes logaddexp to an arbitrary number of addends and is useful in a variety of statistical contexts.

Suppose we need to calculate a probability distribution \(\pi\) parameterized by a vector \(x\)

Taking logs, we get

[33]:

x = 1e6*np.random.random(100)

[34]:

np.log(np.sum(np.exp(x)))

[34]:

inf

[35]:

from scipy.special import logsumexp

[36]:

logsumexp(x)

[36]:

989279.6334854055

Other useful numerically stable functions¶

logp1 and expm1

[37]:

np.exp(np.log(1 + 1e-6)) - 1

[37]:

9.999999999177334e-07

[38]:

np.expm1(np.log1p(1e-6))

[38]:

1e-06

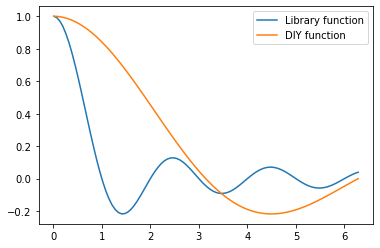

sinc

[39]:

x = 1

[40]:

np.sin(x)/x

[40]:

0.8414709848078965

[41]:

np.sinc(x)

[41]:

3.8981718325193755e-17

[42]:

x = np.linspace(0.01, 2*np.pi, 100)

[43]:

plt.plot(x, np.sinc(x), label='Library function')

plt.plot(x, np.sin(x)/x, label='DIY function')

plt.legend()

pass